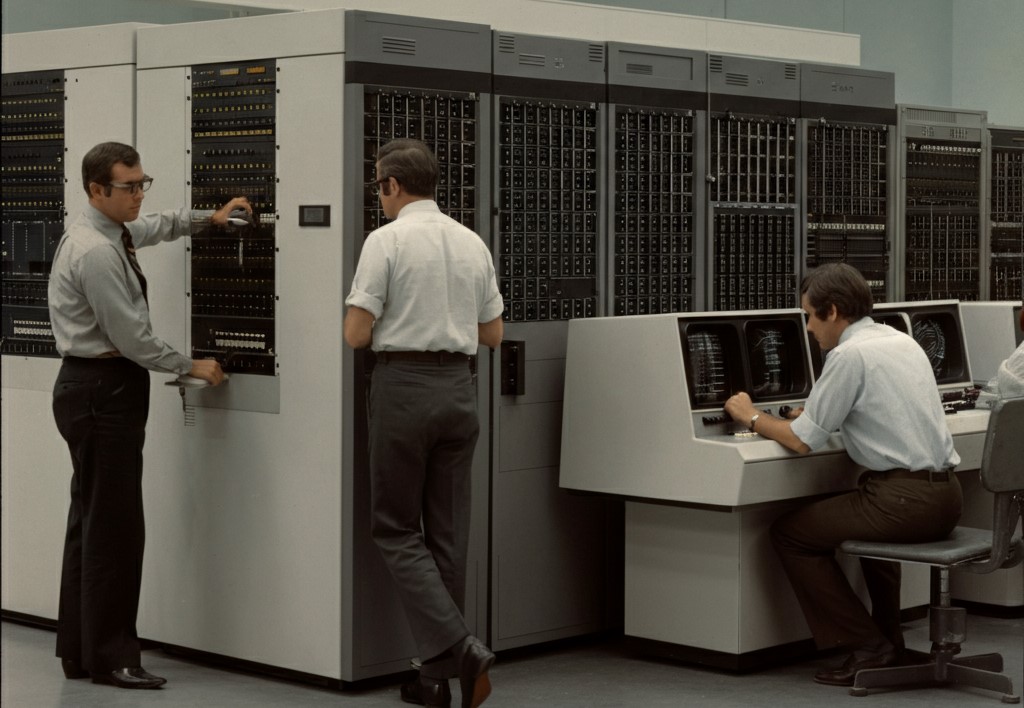

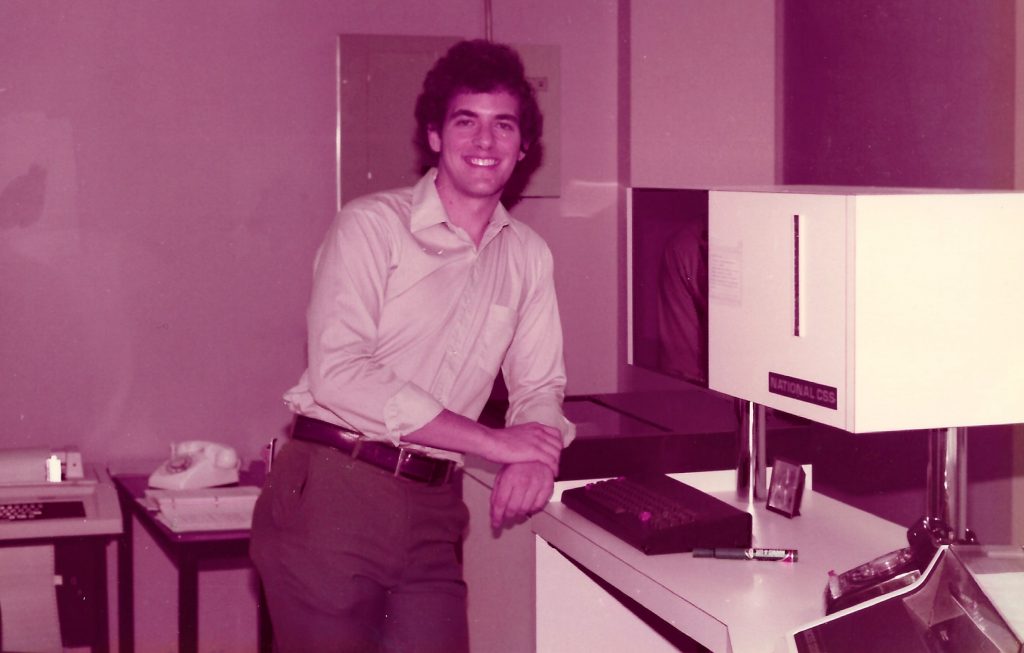

An image recently appeared on my computer and took me back to 1979, my first year in IT, when I worked as an operator on a National CSS (NCSS) 3200. Nicknamed the “mini-370,” it had more memory than IBM’s System/370 and ran VP/CSS, an advanced version of IBM’s CP/CMS developed by NCSS. IBM later incorporated VP/CSS’s innovative architecture, which was far ahead of its time, into CP/CMS. NCSS eventually simplified the naming by referring to VP/CSS as CP/CMS.

First Virtual Machines

In this role, I learned about virtual machines (VMs), a key innovation in modern cloud computing. CP/CMS utilized a control program to fully virtualize the underlying hardware, enabling the creation of multiple independent virtual machines. Each user was provided with a dedicated virtual machine, operating as a standalone computer capable of running compatible software, including complete operating systems. This approach let programmers share hardware, test code, and refine work in isolated virtual environments.

VP/CSS stood out for supporting far more interactive users per machine than other IBM mainframe operating systems of the time. This performance likely influenced IBM’s decision to add virtualization and virtual memory to the System/370, responding to the commercial success of National CSS and its time-sharing model.

Coding Goes Online

Back in the day, programming was a meticulous and labor-intensive process. Code was first handwritten on programming sheets, then transcribed onto punch cards. COBOL programmers were restricted to running only one or two compilations per day because the NCSS 3200 was primarily dedicated to production tasks. A single error on a punch card could set back an entire day’s progress. My role involved feeding these punch card decks into the NCSS 3200 for compilation, a critical yet unforgiving task.

Over time, we adopted a more interactive approach that let developers edit and test COBOL code in real time. Punch cards remained a tool for initial input, but programmers could then revise and recompile their work within virtual CMS environments, which streamlined the entire process. A symbolic debugger also let them enter test data and debug interactively, a revolutionary feature at the time.

The CMS platform greatly enhanced development flexibility, supporting both standard IBM COBOL compilers and the 370 Assembler. Having these tools in one interactive environment helped programmers work more effectively.

It’s remarkable how the principles of virtualization, introduced in the 1970s, have endured and become essential to modern computing. These early systems and the visionary minds behind them revolutionized development and paved the way for today’s technologies.

My Start as a Programmer

The NCSS 3200, pictured above, was where I first learned to program in COBOL and Fortran, an experience that shaped my career in technology. It led to job offers from companies like Aetna (now part of CVS Health), CIGNA, and Desco Data Systems. At 20, I entered the programming world with excitement and ambition, ready for the opportunities ahead.

I clearly remember the interviews with Aetna and Desco, each leaving a strong impression with very different recruitment approaches. At Aetna, the process was polished and welcoming. A senior executive greeted me warmly and took me to lunch in their elegant dining room at the Hartford, CT, headquarters. The conversation was cordial, free of challenging questions, and seemed designed to emphasize the prestige of their organization. Soon after the meeting, I was offered a programmer analyst position with a starting salary of $14,500 per year—generous for the time.

Desco provided an entirely different experience. Upon arrival, I was ushered into a cramped, cluttered conference room without much ceremony. After a short wait, I was given a worksheet with 20 logic and algebra problems—no instructions or time limit. I did my best, knowing I wouldn’t solve them all. Later, I met with an HR representative who asked me to explain my thought process. It became clear that my reasoning had left an impression. Not long after, Desco extended me an offer, though the starting salary—$12,700—fell short of what Aetna had proposed.

Ultimately, I chose Aetna for its higher pay, a decision I’ve never regretted. That choice marked the start of a fulfilling career that profoundly shaped both my professional journey and personal growth. Reflecting on those early days, I’m deeply grateful for the experiences and opportunities that came my way. Working on innovative systems like the NCSS 3200 taught me programming fundamentals and provided lessons that still inspire me today.

Mainframe Evolution

Over the years, I’ve learned a lot, and it’s fascinating to reflect on how far technology has come. In 1979, the IBM System/370 had 500 KB of RAM, 233 MB of storage, and ran at 2.5 MHz. This massive machine occupied an entire room. By today’s standards, it could barely store a small photo collection, and accessing those files would be painfully slow.

Fast forward to now: IBM’s cutting-edge z16 mainframe is a marvel of modern engineering. It can hold 240 server-grade CPUs, 40 terabytes of error-correcting RAM, and petabytes of redundant flash storage. Built to handle massive data volumes at 99.999% uptime, it is designed for only about five minutes of downtime per year.
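The “five nines” figure is easy to check with a little arithmetic. Here is a minimal sketch in Python, assuming a 365-day year; the function name is my own, not anything from IBM. It shows that 99.999% uptime leaves a little over five minutes of downtime per year (about 5.26).

```python
# Assumption: a non-leap year of 365 days, i.e. 525,600 minutes.
MINUTES_PER_YEAR = 365 * 24 * 60


def downtime_minutes_per_year(availability_percent):
    """Return the yearly downtime (in minutes) implied by an uptime percentage."""
    unavailable_fraction = 1 - availability_percent / 100
    return MINUTES_PER_YEAR * unavailable_fraction


# Compare three, four, and five nines side by side.
for pct in (99.9, 99.99, 99.999):
    minutes = downtime_minutes_per_year(pct)
    print(f"{pct}% uptime -> about {minutes:.2f} minutes of downtime per year")
```

Each extra nine cuts the allowed downtime by a factor of ten, which is why the last nine is so hard to earn.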

The evolution is staggering. It’s no wonder the mainframe is experiencing a resurgence, or perhaps it never truly disappeared. This versatile machine has adapted to the changing times, evolving from a bulky production-focused system to a sleek, high-performing powerhouse. Today, mainframes are used for everything from banking systems and air traffic control to high-volume retail and e-commerce transaction processing. And with advanced features like virtualization and cloud integration, they continue to push the boundaries of what’s possible.

Bridging the Gap Between Old and New

One of the biggest impacts of mainframe technology is its ability to connect old and new systems. Many organizations want to adopt newer technologies but struggle to integrate them with legacy applications and mainframe data. Modern practices like cloud integration and DevOps help close that gap, which is why mainframes remain crucial to day-to-day operations.

In conclusion, my career has come a long way since 1979, and so has the world of mainframe technology. From learning to program on an NCSS 3200 to working with cutting-edge systems, I’ve seen how this powerful technology has evolved and made an impact. As we push the boundaries of what’s possible, I’m excited to see how mainframes will shape our digital future.

Note

I was asked to explain CP/CMS, since many people are not familiar with it. CP/CMS, short for Control Program/Cambridge Monitor System, was introduced in the late 1960s and served as the foundation for IBM’s VM operating system, which debuted in 1972. CP handled the virtual machine functionality, while CMS operated as a lightweight, user-friendly operating system, running in a separate virtual machine for each user. This setup enabled users to easily create and edit files within their own isolated environments.

The CP/CMS system was a revolutionary milestone in operating system design, allowing multiple users to run individual virtual machines on a single physical computer. This groundbreaking concept, now known as virtualization, has since become a cornerstone of modern computing, powering countless advancements in efficiency and resource management.
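For readers who think in code, the division of labor between CP and CMS can be pictured with a toy model. This is only a sketch in Python of the idea of one isolated environment per user. The class and method names (`ControlProgram`, `VirtualMachine`, `logon`, `write_file`) are hypothetical and do not correspond to real CP or CMS interfaces.

```python
class VirtualMachine:
    """Toy stand-in for one user's CMS virtual machine (illustrative only)."""

    def __init__(self, owner):
        self.owner = owner
        self.files = {}  # private file space, visible to this machine only

    def write_file(self, name, text):
        self.files[name] = text


class ControlProgram:
    """Toy stand-in for CP: hands each user a separate virtual machine."""

    def __init__(self):
        self._machines = {}

    def logon(self, user):
        # Create the user's machine on first logon, then reuse it afterwards.
        if user not in self._machines:
            self._machines[user] = VirtualMachine(user)
        return self._machines[user]


cp = ControlProgram()
alice = cp.logon("alice")
bob = cp.logon("bob")

# A file created in one user's machine does not appear in another's.
alice.write_file("PAYROLL COBOL", "IDENTIFICATION DIVISION.")
print(sorted(alice.files))  # ['PAYROLL COBOL']
print(sorted(bob.files))    # []
```

The real control program virtualized the hardware itself, not just a file table, but the shape is the same: one shared machine underneath, and a private workspace for each user on top.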
